TL;DR

If you can’t deploy on Fridays with confidence, your next priority should be fixing what’s broken in your deployment process, not avoiding Fridays. Exceptions apply; use common sense.

Friday, Deploy Day

Now that the holiday season is over (until how late in January can you say happy new year?), it’s time to start thawing the winter deployment freezes.

Never deploying on Friday has become software engineering lore. However, if you’re always afraid to deploy on Fridays, you have bigger problems.

If you don’t feel confident, don’t deploy; that is reasonable. Use your common sense. But identifying and fixing whatever is reducing your confidence should go high on your priority list. This isn’t about bravado; it’s about building systems that work reliably, predictably, repeatedly, and to an extent, autonomously.

Building Deployment Confidence

Confidence doesn’t come from vibes; it’s an outcome. It comes from actually catching problems before they hit users and keeping the damage small when things do go boom.

Some of the practices that I have used in the past to overcome deployment fears are:

Test Coverage that Matters

Not just high percentage coverage, but tests that actually catch regressions before they hit production. Your test suite should give you genuine confidence covering your key scenarios, not just a false sense of security.

Reliable Observability

Availability and Performance SLOs (topic for another day) on the things that actually matter to your users. Alert fatigue from false positives is a deployment killer. If every deploy triggers warnings that turn out to be nothing, you’ll stop listening to your monitoring right when you need it most.

Common Sense over Dogma

Obviously there are exceptions: not every change carries the same risk. Deploying a database migration at 6 PM on Friday before a three-day weekend? That’s foolish. These high-risk deployments should be rare though, not the common scenario.

Did we stop deploying this season? Nope, business as usual.

If It Hurts, Do It More Often

Now I’m not advocating for self-inflicting pain. The deployment problems you’re trying to avoid will compound over time. Technical debt is no joke, and that applies to your deployment/monitoring code, not just feature code.

Things don’t get better by waiting. They get worse as finished code sits undeployed and more changes keep queuing up behind it. What could have been several small, routine deployments turns into one big-bang deploy.

Instead, chip away at the problem in each deploy:

- Improve the deployment process. Automate manual steps, improve the pipeline speed, and decrease the time of feedback loops.

- Strengthen observability and detection. Know when something breaks, immediately and accurately.

- Practice recovery until it becomes muscle memory. Fix forward, turn a feature flag off, rollback if needed.

A deployment should be a non-event. It just happens without thinking about it, just like breathing. The ceremony and stress we attach to deployments are a symptom, not a feature.

I’ve seen this play out firsthand. I still remember the time when code changes were queued and releases happened every few weeks. If you missed the train, don’t worry, another one would be around in two weeks’ time. This caused nervousness and disruption in the team: there was always something broken after a release, and devs had to go and help the ops team troubleshoot.

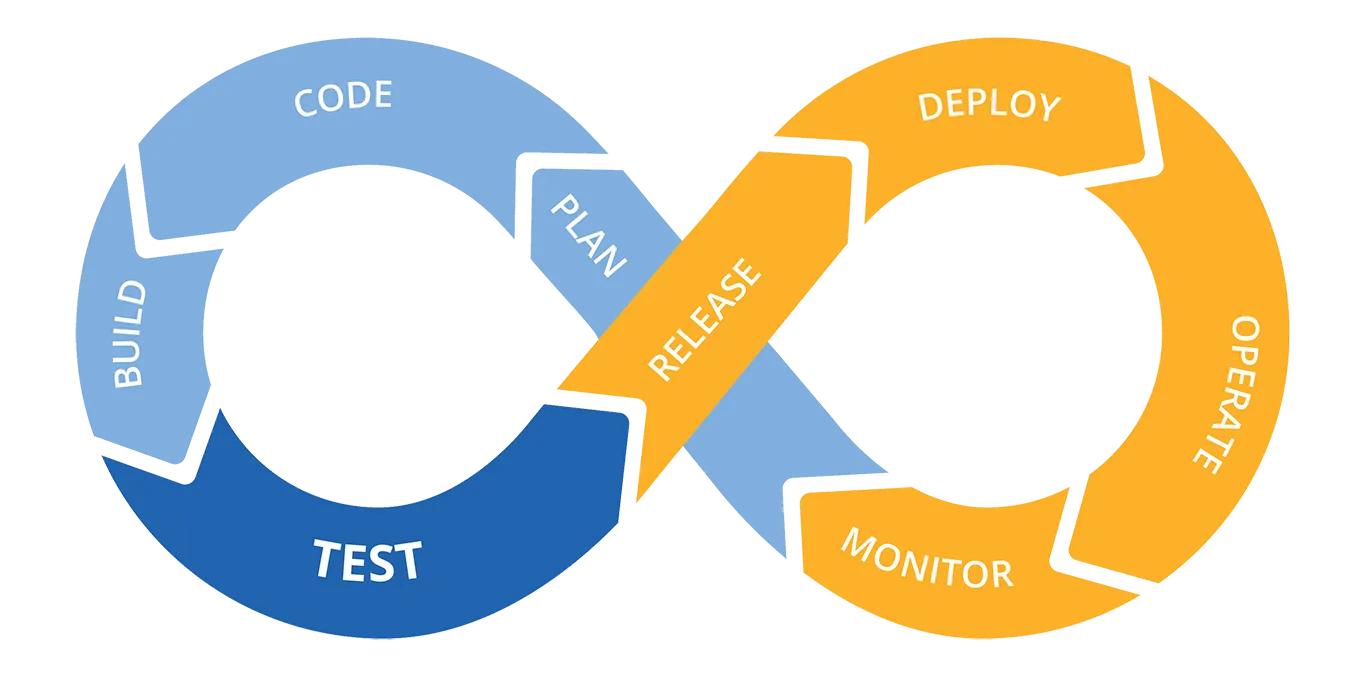

Once we started adopting continuous delivery practices, the release anxiety disappeared. SLOs were our main success indicator. Improving DORA metrics was a non-functional requirement of all product features.

Deploy Isn’t Done Until You Monitor It

The work isn’t complete when the pipeline is green; it’s complete when you’ve verified it’s working correctly in production.
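As a rough illustration of what verifying in production could look like, here is a minimal sketch that compares the error rate in a window before the deploy with a window after it. The names `WindowStats` and `deploy_looks_healthy`, and the default thresholds, are hypothetical; where the counts come from (your metrics system) is left out, and a real check would be tied to your own SLOs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WindowStats:
    """Request and error counts for one observation window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        # Treat an empty window as a 0% error rate to avoid dividing by zero.
        return self.errors / self.requests if self.requests else 0.0


def deploy_looks_healthy(
    before: WindowStats,
    after: WindowStats,
    max_increase: float = 0.005,  # hypothetical tolerance: +0.5 percentage points
    min_requests: int = 100,      # hypothetical minimum sample size
) -> Optional[bool]:
    """Return True or False once there is enough traffic, or None if it is too early to tell."""
    if after.requests < min_requests:
        return None
    return after.error_rate - before.error_rate <= max_increase
```

Returning `None` for a thin sample is a deliberate choice: a check that passes or fails on a handful of requests is exactly the kind of noisy signal that teaches people to ignore their monitoring.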

Remember that deployment is different to release. You can deploy code behind feature flags, gradually roll out changes, and decouple technical deployment from user-facing releases.

Continuous delivery is a solved problem. The techniques, tools, and practices for reliable continuous delivery have existed for years. If you’re not doing it, it’s not because the technology doesn’t exist.

The True Cost of Fear-Driven Development

Fear-driven development creates a vicious cycle:

- “Don’t deploy Friday, we might break something”

- Next week is a short four-day week with people out

- The week after has critical customer commitments

- Weeks later, you have a code integration nightmare

Each delay makes the next deployment riskier. The diff grows. The surface area for regressions expands. What could have been three small, manageable deploys becomes one terrifying big-bang release.

Undeployed code is unsold inventory. It’s value you’ve created but haven’t delivered. It’s code that hasn’t been validated by real users. It’s technical debt accumulating interest.

Change Freezes: the exception, not the rule

Change freezes during holiday seasons or critical product launches are reasonable, as long as they stay exceptional. These freezes should be:

- Rare and well-defined

- Time-boxed

- Followed by retrospectives asking: “What changes would let us safely deploy even during these periods?”

If every week has a reason why “now isn’t a good time,” your deployment process is the problem.

The Path Forward

Well, adopt the process that works for you.

Ultimately you know your software, your databases, your pipelines, your observability, your teams, and your leadership better than I do. When releases don’t suck, everything else gets better. There’s actual research backing this up. Remember that how quickly and safely you can deliver software into the hands of a paying customer is a sign of operational maturity.

The real question isn’t whether to deploy on Fridays or not, it’s whether your deployment process is mature enough that it doesn’t matter.

For me, these are a few of the practices I’ve put in place in several organisations that helped reduce deployment anxiety:

Start by Making Deployments Boring

If a deployment requires a calendar invite, a checklist, or a specific person to be online, that’s your first problem to fix. Aim for an automated deploy on merge to main that anyone on the team can run with confidence.

Lower the Blast Radius by Default

Deploy behind feature flags. Release changes gradually. Prefer small, reversible changes over large, all-or-nothing releases.
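To make the gradual release idea concrete, here is a minimal sketch of a percentage-based flag check. The function names `rollout_bucket` and `is_enabled` are hypothetical, and it assumes you pass the rollout percentage in from your own configuration; a dedicated feature flag service would normally handle this for you.

```python
import hashlib


def rollout_bucket(flag_name: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return rollout_bucket(flag_name, user_id) < rollout_percent
```

Because the bucket is derived from a hash rather than a random draw, a given user keeps the same answer between requests, and raising the percentage only ever adds users. Setting it to 0 acts as a kill switch that needs no redeploy.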

Measure What Actually Matters

Track SLOs and error budgets, not just deploy counts. Use DORA metrics as signals, not goals, and use all of them together: multiple daily deployments are not helpful if your change failure rate is 50%.
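The arithmetic behind these two signals is simple enough to sketch. The helper names below are hypothetical, and the example assumes a request-based availability SLO; your own SLO definitions may be time-based or latency-based instead.

```python
def error_budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent (negative means overspent)."""
    allowed_failures = (1.0 - slo_target) * total_requests
    if allowed_failures == 0:
        # A 100% target (or no traffic) leaves no budget to spend.
        return 0.0 if failed_requests == 0 else float("-inf")
    return 1.0 - failed_requests / allowed_failures


def change_failure_rate(total_deploys: int, failed_deploys: int) -> float:
    """Share of deployments that led to a failure in production."""
    if total_deploys == 0:
        return 0.0
    return failed_deploys / total_deploys
```

With a 99.9% target over 1,000,000 requests, the budget is about 1,000 failed requests, so 250 failures leaves roughly 75% of the budget. Twenty deploys with ten failures is the 50% change failure rate mentioned above, however impressive the deploy count looks on its own.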

Reframe Technical Debt as Technical Improvement

Treat deployment problems like real bugs, not something to deal with ‘someday’. If a deploy was problematic, hold a retro to capture why, and fix the causes as part of the product backlog. Small improvements, applied consistently, compound quickly. In my experience, this approach took deployments from hours to minutes.

Align with Leadership Early

Get leadership on board early. They need to understand that deploying often is actually safer, not riskier. And if you need approvals? Automate them into the pipeline so they don’t slow you down.

This was important in a regulated industry where the Change Advisory Board (CAB) met the day before each deployment. Moving that approval step to before a feature was developed allowed us to implement continuous delivery in a tightly regulated environment.

The Gut Check

Ask yourself:

- Can anyone on the team deploy without heroics or tribal knowledge?

- Are deployments small, frequent, and easy to reverse?

- Do you know within minutes if a deploy actually broke something users care about?

- Are alerts actionable, or mostly noise?

- Can you turn off or roll back a bad change quickly without redeploying?

- Does deployment pain result in fixes, or just longer gaps between releases?

- Are SLOs and delivery metrics treated as product concerns, not ops-only problems?

If most of these are “no” or “sometimes,” avoiding Fridays isn’t the solution. Improving the system is.

Frequent, low-risk deployments are a sign of operational maturity. When the process is healthy, the day of the week stops mattering.